LegalRelectra: Mixed-domain Language Modeling for Processing Long Legal Documents

By Chloe Chen, based on work by Wenyue Hua, Yuchen Zhang, Zhe Chen, Josie Li and Melanie Weber

Background

Many legal services rely on processing and analyzing large collections of documents, so automating such tasks with NLP has become a growing area of research. There are two key challenges in developing legal-domain models: specialized terminology and passage length.

First, many popular language models, such as BERT or RoBERTa, are general-purpose models, which have limitations on processing specialized legal terminology and syntax. Some domain-specific pre-trained models have been developed, for example Clinical-BERT for medicine and Legal-BERT for the law, but legal documents may contain specialized vocabulary from mixed domains, such as medical terminology in addition to legal terminology in personal injury-related text.

Second, extracting key legal information, such as the plaintiff and defendant in a case,requires long-range text comprehension. However, most legal texts are much longer than 512 tokens, the typical limit for BERT-based models.

What is LegalRelectra?

To process specialized text from multiple domains, the paper describes a training procedure for mixed-domain language models. For the case of personal injury texts explored in the paper, the model is trained on mixed legal and medical domains.

Furthermore, to process long passages, the paper describes a novel model architecture (Relectra) that adapts the popular Electra model to the processing of long passages. This is done by replacing BERT generators and discriminators with Reformer, which can process text length up to 8,192 tokens.

The resulting language model, LegalRelectra, is well equipped to process long passages of mixed-domain text.

Testing the Model

In the paper, LegalRelectra is tested on three different stages: tokenizing, pre-training, and downstream performance (Named Entity Recognition).

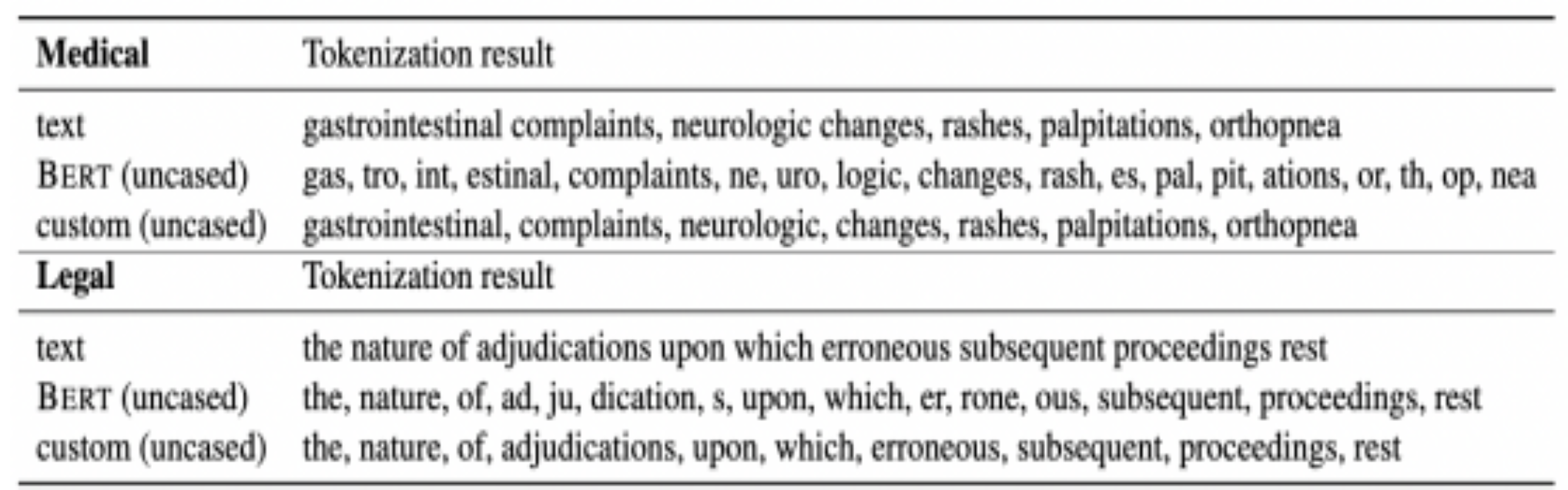

Tokenization refers to the process of splitting a stream of characters into words, and is crucial for good downstream performance. In particular, tokenization is especially important for processing domain-specific text containing a large amount of specialized terminology. Instead of using the standard BERT tokenizer, the paper trains a custom tokenizer that generates a more sensible tokenization of domain-specific text (see fig 1 for examples).

LegalRelectra is then pre-trained on legal, medical, and mixed-domain text. One LegalRelectra model is trained on tokens generated from the custom (domain-specific) tokenizer, while a second LegalRelectra model is trained using the standard (general-domain) BERT tokenizer. Then, both models are put to a pre-training task called Masked language modeling (MLM). In MLM, the input is corrupted by replacing some tokens with “[MASK]”, and then a model is trained to reconstruct the original tokens. The discriminator must then predict whether each token in the corrupted input was replaced by a generator sample or not.

Finally, LegalRelectra is tested on Named Entity Recognition, a downstream task where entities (i.e. words or phrases) are automatically labeled with predetermined labels. In this case, there are three legal domain labels (case type, plaintiff, defendant) and four labels for the mixed medical-legal domain (case type, plaintiff, defendant, injury). For this task, LegalRelectra is tested against three different baseline models: BERT, Clinical-BERT, and Legal-BERT.

Results

The performance of tokenizers is in general difficult to evaluate, as it is highly dependent on the downstream application. This paper compares the output of BERT and LegalRelectra tokenizers by analyzing the total number of recognized words and the number of total unique errors, as well as errors in medical and legal phrases. Results show that the custom LegalRelectra tokenizer performs better at recognizing words and has a smaller number of errors involving legal and medical terminology, suggesting a better performance on domain-specific texts.

n the pre-training stage, both the generator and discriminator model trained with the custom tokenizer performs better than the model trained with the Bert tokenizer toward the end of the training process.

Finally, in the downstream task, we observe that LegalRelectra outperforms the general-domain Bert model, as well as the specialized Legal-Bert and Clinical-Bert models on both legal and medical domains. Notably, LegalRelectra trained with the custom tokenizer also outperforms LegalRelectra trained with the Bert tokenizer, demonstrating the benefits of a domain-specific tokenizer. Overall, LegalRelectra outperforms both general-purpose models and legal-domain models on legal and mixed-domain Named Entity Recognition.